As artificial intelligence (AI) and machine learning (ML) technologies continue to advance, their integration into operating systems (OS) is changing how we interact with and use computing resources. This integration can improve performance and security and enable smarter, more adaptive user experiences. This article explores the impact of AI and ML integration on operating systems, covering the benefits, the challenges, and concrete examples of how these technologies are shaping modern OS environments.

1. Enhancing Performance with AI and ML

Predictive Resource Management: AI and ML algorithms can forecast demand for system resources and allocate them ahead of time. By analyzing historical data and usage patterns, these algorithms can anticipate peak loads and provision CPU, memory, or I/O capacity before it is needed, rather than reacting after the system is already saturated. This predictive capability helps balance workloads, reduce latency, and improve overall system efficiency.
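
A minimal sketch of the idea, assuming load is sampled at fixed intervals: simple exponential smoothing forecasts the next interval's load, and capacity is sized from that forecast plus headroom. The function names, the 20% headroom, and the per-core capacity figure are illustrative assumptions, not taken from any particular OS; production schedulers typically use richer models that also capture trends and daily seasonality.

```python
import math


def ewma_forecast(samples, alpha=0.5):
    """One-step-ahead load forecast using simple exponential smoothing.

    Higher alpha reacts faster to recent samples; lower alpha smooths out noise.
    """
    if not samples:
        raise ValueError("need at least one sample")
    level = samples[0]
    for x in samples[1:]:
        level = alpha * x + (1 - alpha) * level
    return level


def cores_to_provision(samples, per_core_capacity=100.0, headroom=0.2, alpha=0.5):
    """Hypothetical sizing rule: forecast the load, add headroom, round up to whole cores."""
    predicted = ewma_forecast(samples, alpha)
    return max(1, math.ceil(predicted * (1 + headroom) / per_core_capacity))


# Requests per second observed over the last three intervals (illustrative numbers).
history = [100, 200, 300]
print(ewma_forecast(history))       # 225.0
print(cores_to_provision(history))  # 3
```

Note that plain smoothing lags a rising trend (the forecast of 225 trails the latest sample of 300), which is partly why the headroom term is there; trend-aware variants such as Holt's linear smoothing reduce that lag.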

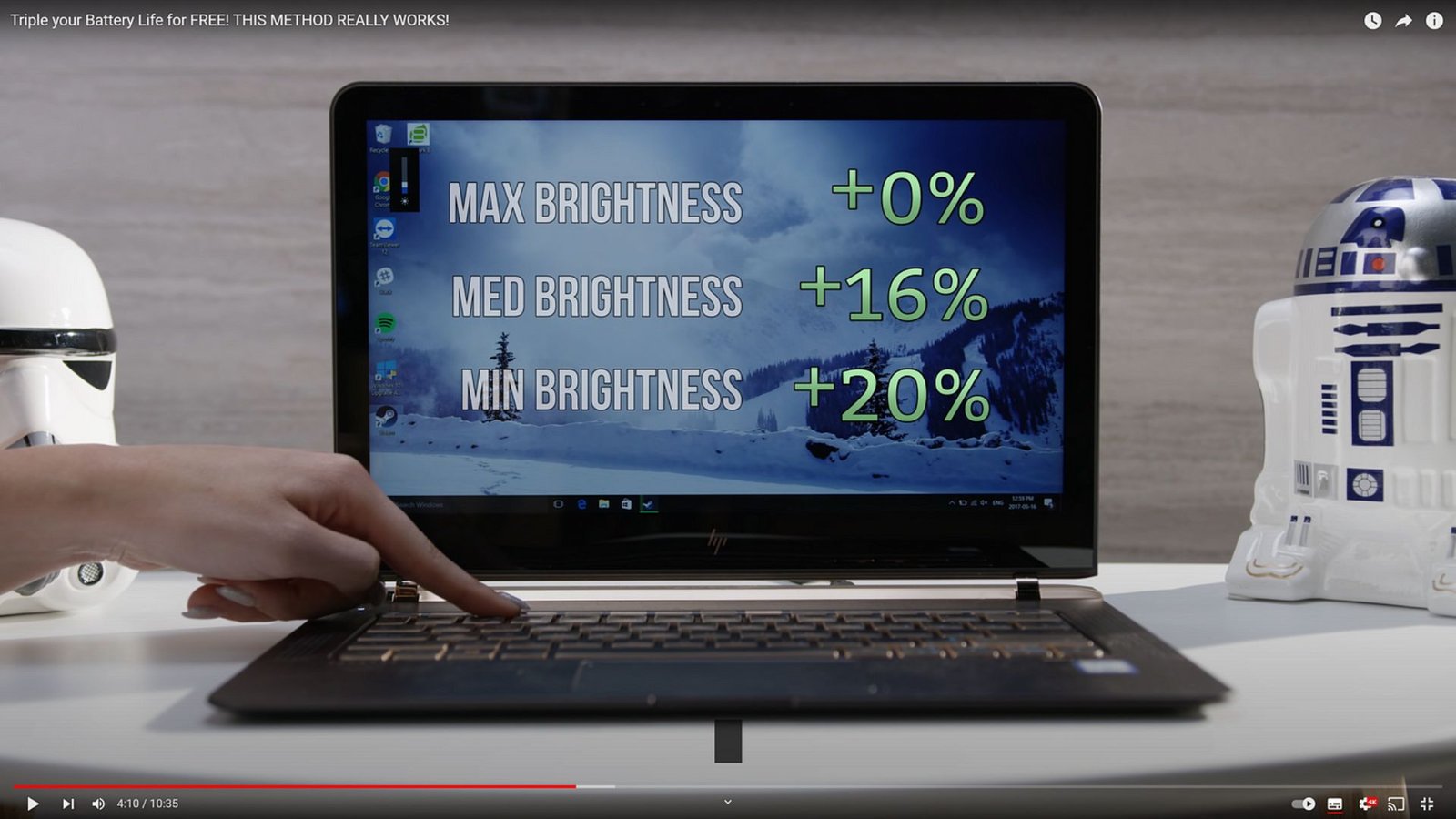

Dynamic Optimization: Machine learning models can adjust system settings dynamically based on real-time performance data. For instance, an OS can manage power consumption by scaling CPU and GPU frequency to match the current workload, which extends battery life on laptops and improves energy efficiency in data centers.
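
To make "adjusting settings based on real-time performance data" concrete, here is a minimal sketch of one learning-based approach: an epsilon-greedy multi-armed bandit that tries different CPU frequency levels and gradually settles on the one with the best observed reward. The reward function is a hypothetical cost model invented for illustration (energy per unit of work rising roughly with the square of frequency, plus a penalty for missing a deadline); real frequency governors, such as those in Linux's cpufreq subsystem, use different and mostly heuristic policies.

```python
import random


class FrequencyBandit:
    """Epsilon-greedy bandit that learns which CPU frequency level earns the highest reward."""

    def __init__(self, levels_ghz, epsilon=0.1, seed=None):
        self.levels = list(levels_ghz)
        self.epsilon = epsilon
        self.counts = [0] * len(self.levels)    # times each level was tried
        self.values = [0.0] * len(self.levels)  # running mean reward per level
        self.rng = random.Random(seed)

    def choose(self):
        # Try every level once before exploiting what has been learned.
        for i, count in enumerate(self.counts):
            if count == 0:
                return i
        # Occasionally explore a random level so estimates for the others keep improving.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.levels))
        return max(range(len(self.levels)), key=lambda i: self.values[i])

    def update(self, i, reward):
        # Incremental mean avoids storing the full reward history.
        self.counts[i] += 1
        self.values[i] += (reward - self.values[i]) / self.counts[i]

    def best_level(self):
        return self.levels[max(range(len(self.levels)), key=lambda i: self.values[i])]


def simulated_reward(freq_ghz, work=2.0, deadline_s=1.5):
    """Hypothetical cost model: energy per unit of work ~ f^2, plus a penalty for a missed deadline."""
    runtime_s = work / freq_ghz
    energy = work * freq_ghz ** 2
    penalty = 10.0 if runtime_s > deadline_s else 0.0
    return -(energy + penalty)


bandit = FrequencyBandit([1.0, 1.5, 2.0, 3.0], epsilon=0.1, seed=42)
for _ in range(200):
    arm = bandit.choose()
    bandit.update(arm, simulated_reward(bandit.levels[arm]))
print(bandit.best_level())  # 1.5
```

In this simulation 1.0 GHz uses the least energy but misses the deadline, so the bandit learns that 1.5 GHz is the best trade-off.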

Application Performance: AI can also improve application performance by optimizing how and when applications run. For example, ML algorithms can analyze application usage patterns to predict which applications a user is likely to open next and preload them into memory, improving launch times and reducing lag for a smoother user experience.
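
One way usage patterns can translate into responsiveness is by predicting which application will be opened next so the OS can warm it up in advance. The sketch below assumes a simple first-order Markov model over app-launch sequences; the class name and the idea of feeding its output to a preloader are illustrative assumptions, not a description of how any specific OS implements prelaunching.

```python
from collections import Counter, defaultdict


class NextAppPredictor:
    """First-order Markov model: count app-to-app transitions, predict the likeliest next apps."""

    def __init__(self):
        # transitions[a][b] = how many times app b was opened right after app a
        self.transitions = defaultdict(Counter)

    def observe(self, launch_sequence):
        for current, following in zip(launch_sequence, launch_sequence[1:]):
            self.transitions[current][following] += 1

    def predict(self, current_app, k=1):
        """Return up to k candidate apps to preload, most likely first."""
        counts = self.transitions.get(current_app)
        if not counts:
            return []
        return [app for app, _ in counts.most_common(k)]


predictor = NextAppPredictor()
predictor.observe(["mail", "calendar", "mail", "calendar", "mail", "browser"])
print(predictor.predict("mail"))       # ['calendar']
print(predictor.predict("mail", k=2))  # ['calendar', 'browser']
print(predictor.predict("browser"))    # []
```

A first-order model only looks at the current app; conditioning on time of day or the last few launches is a natural extension when simple transition counts are not predictive enough.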

2. Improving Security through AI and ML

Threat Detection and Response: AI and ML are increasingly used to identify and respond to security threats. Machine learning models can analyze large volumes of telemetry, such as process activity, file access, and network traffic, to detect unusual patterns that may indicate malware or other cyber threats. These models can trigger real-time alerts and automatic responses, such as quarantining a suspicious file, to mitigate potential risks.

Behavioral Analysis: AI-driven security systems use behavioral analysis to monitor user and system activities. By establishing a baseline of normal behavior, these systems can detect anomalies that may signify a security breach or insider threat. This proactive approach helps prevent unauthorized access and protect sensitive data.
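
The baseline-and-deviation idea can be sketched with per-feature z-scores: learn the mean and standard deviation of each activity metric during normal operation, then flag observations that fall far outside that range. This is a minimal sketch assuming numeric, roughly stable metrics; the feature names and the three-standard-deviation threshold are illustrative choices, and production systems typically use richer models that account for time of day, per-user differences, and correlated features.

```python
import statistics


class BehaviorBaseline:
    """Learn a per-feature baseline from normal activity and flag large deviations by z-score."""

    def __init__(self, threshold=3.0):
        self.threshold = threshold  # how many standard deviations count as anomalous
        self.stats = {}

    def fit(self, history):
        # history maps a feature name to values observed during normal operation
        for feature, values in history.items():
            self.stats[feature] = (statistics.fmean(values), statistics.pstdev(values))
        return self

    def anomalies(self, observation):
        """Return {feature: z_score} for features that deviate beyond the threshold."""
        flagged = {}
        for feature, value in observation.items():
            if feature not in self.stats:
                continue  # no baseline yet for this feature
            mean, stdev = self.stats[feature]
            if stdev == 0:
                z = 0.0 if value == mean else float("inf")
            else:
                z = abs(value - mean) / stdev
            if z > self.threshold:
                flagged[feature] = z
        return flagged


# Hypothetical metrics for one user account, sampled during normal activity.
baseline = BehaviorBaseline().fit({
    "logins_per_hour": [2, 3, 2, 4, 3, 2, 3],
    "files_read_per_min": [40, 42, 38, 41, 39, 40, 40],
})
alerts = baseline.anomalies({"logins_per_hour": 3, "files_read_per_min": 400})
print(sorted(alerts))  # ['files_read_per_min']
```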

Automated Patch Management: AI can automate the patch management process by identifying vulnerabilities and deploying updates. Machine learning algorithms can predict which vulnerabilities are most likely to be exploited and prioritize patching efforts accordingly, enhancing overall system security.
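
A minimal sketch of risk-based patch prioritization, assuming each vulnerability already carries a predicted probability of exploitation (the kind of score a model such as FIRST's Exploit Prediction Scoring System, EPSS, produces) and a severity score on a CVSS-like 0 to 10 scale. The exposure weighting and the vulnerability records below are hypothetical; the point is that ranking by expected risk can differ from ranking by severity alone.

```python
from dataclasses import dataclass


@dataclass
class Vulnerability:
    vuln_id: str
    exploit_probability: float  # predicted likelihood of exploitation, 0.0 to 1.0
    severity: float             # impact score on a 0 to 10 scale
    internet_facing: bool       # whether an affected service is exposed to the internet


def risk_score(v: Vulnerability) -> float:
    """Expected risk: likelihood times normalized severity, weighted up for exposed systems."""
    exposure_weight = 1.5 if v.internet_facing else 1.0  # hypothetical weighting
    return v.exploit_probability * (v.severity / 10.0) * exposure_weight


def patch_order(vulns):
    """Return vulnerability IDs from highest to lowest expected risk."""
    return [v.vuln_id for v in sorted(vulns, key=risk_score, reverse=True)]


# Hypothetical inventory: VULN-A is the most severe but rarely exploited.
inventory = [
    Vulnerability("VULN-A", exploit_probability=0.02, severity=9.8, internet_facing=False),
    Vulnerability("VULN-B", exploit_probability=0.60, severity=7.5, internet_facing=False),
    Vulnerability("VULN-C", exploit_probability=0.30, severity=6.0, internet_facing=True),
]
print(patch_order(inventory))  # ['VULN-B', 'VULN-C', 'VULN-A']
```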

3. Enhancing Usability with AI and ML

Smart Assistants and Automation: AI-powered virtual assistants, such as Apple’s Siri and Microsoft’s Copilot (the successor to Cortana in Windows), integrate with operating systems to offer personalized assistance and automate routine tasks. These assistants use natural language processing (NLP) to understand and respond to user commands, making interactions more intuitive and efficient.

Adaptive User Interfaces: Machine learning enables adaptive user interfaces that adjust based on user preferences and behavior. For example, an OS can modify its layout, suggest relevant applications, or automate frequently performed tasks based on individual usage patterns, enhancing the overall user experience.
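
Suggesting relevant applications is often framed as ranking by "frecency", a blend of frequency and recency. The sketch below assumes each launch is logged with a timestamp in hours and lets a launch's weight halve every `half_life_hours`; the function name, the 24-hour half-life, and the sample log are illustrative assumptions rather than any particular OS's algorithm.

```python
import math
from collections import defaultdict


def suggest_apps(launches, now, half_life_hours=24.0, k=3):
    """Rank apps by exponentially decayed launch counts (frecency).

    launches: iterable of (app_name, launch_time_in_hours) pairs.
    Each launch contributes 1.0 when fresh and half as much every half_life_hours.
    """
    decay_rate = math.log(2) / half_life_hours
    scores = defaultdict(float)
    for app, launched_at in launches:
        age_hours = now - launched_at
        scores[app] += math.exp(-decay_rate * age_hours)
    # Highest score first; ties broken alphabetically for a stable order.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [app for app, _ in ranked[:k]]


# Terminal was opened three times, but three days ago; browser and editor were used recently.
log = [("terminal", 0), ("terminal", 1), ("terminal", 2),
       ("browser", 70), ("browser", 71), ("editor", 72)]
print(suggest_apps(log, now=72))  # ['browser', 'editor', 'terminal']
```

Raising or lowering `half_life_hours` shifts the balance toward long-term habits or toward recent activity.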

Accessibility Features: AI and ML are improving accessibility by offering features such as speech-to-text, text-to-speech, and visual recognition. These technologies make computing more inclusive for users with disabilities, allowing them to interact with their devices in new and more effective ways.

4. Challenges and Considerations

Data Privacy: Integrating AI and ML into operating systems raises concerns about data privacy. Many AI features need access to large amounts of user data, such as usage history, voice recordings, and photos, to function effectively. Ensuring that this data is handled securely, processed on-device where possible, and managed in compliance with privacy regulations is essential.

Algorithmic Bias: AI and ML models can inadvertently reflect biases present in the data they are trained on. Such bias can affect automated decisions and user experiences; for example, a speech recognizer trained mostly on a few accents may perform worse for users with other accents. It is crucial to train models on diverse datasets and to regularly review and adjust them to minimize bias.

Resource Consumption: AI and ML processes can be resource-intensive, requiring significant computational power and memory. Balancing the benefits of these technologies with their impact on system performance and resource consumption is an ongoing challenge.

5. Examples of AI and ML Integration in Operating Systems

Windows: Windows has integrated AI through features like Cortana, a voice-activated assistant that Microsoft has since retired in favor of Copilot, and Microsoft Defender Antivirus (formerly Windows Defender), which uses machine learning for advanced threat detection. The OS also incorporates predictive text and automatic photo tagging through AI technologies.

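macOS: macOS leverages AI with features like Siri for voice commands and machine learning-based recognition of people, objects, and scenes in the Photos app. It also learns from user behavior to manage power, for example through Optimized Battery Charging, which adapts charging to a user’s daily routine to reduce battery wear.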

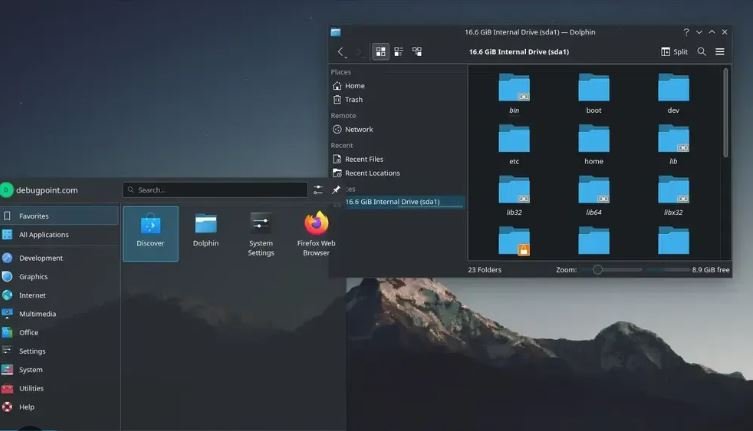

Linux: Linux is a common platform for developing and deploying machine learning models, and frameworks such as TensorFlow and PyTorch run on most distributions. AI on Linux is typically applied through tools built on top of the OS for server management, performance optimization, and security enhancements.

Android: Android uses AI for features like Google Assistant, which offers voice-based interaction, and Adaptive Battery and adaptive app management, which optimize power consumption and performance based on usage patterns.

Chrome OS: Chrome OS integrates AI for smart features like predictive search, and it relies on Google’s AI-driven threat detection systems for enhanced security. Its automatic updates are not an AI feature in themselves, but they keep these protections current.

Conclusion

AI and machine learning integration into operating systems is reshaping how we manage and interact with computing environments. By enhancing performance, improving security, and increasing usability, these technologies offer significant benefits to users and organizations. However, addressing the challenges of data privacy, algorithmic bias, and resource consumption is crucial to realizing the full potential of AI and ML in operating systems. As these technologies continue to evolve, they are likely to play an increasingly important role in shaping the future of computing.