Customizing and Building Kernels

Kernel customization and building are advanced topics in operating system development that allow developers to tailor the operating system’s core functionality to meet specific needs. This process involves modifying the kernel’s source code, configuring kernel options, and compiling the kernel to create a customized version suited to particular hardware or use cases. This article provides a detailed overview of kernel customization and building, including essential steps and considerations.

Understanding the Kernel

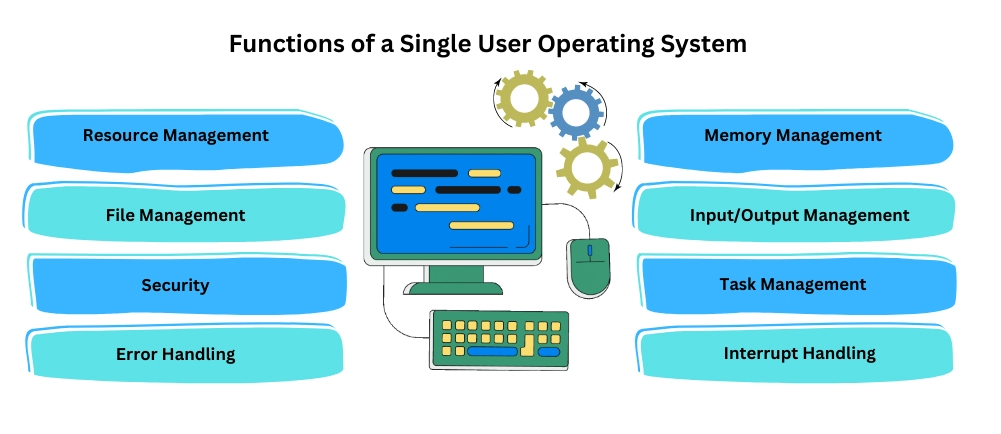

The kernel is the central component of an operating system that manages hardware resources, provides system services, and handles communication between software and hardware. Customizing the kernel allows developers to optimize performance, add new features, or support specific hardware that is not natively supported by the standard kernel.

Why Customize and Build Kernels?

- Performance Optimization: Tailoring the kernel to the specific needs of your hardware or applications can improve system performance and efficiency.

- Hardware Support: Adding or updating drivers and support for specific hardware components that are not included in the default kernel.

- Feature Enhancement: Implementing new features or experimental functionalities that are not available in the standard kernel release.

- Security Improvements: Customizing the kernel to include additional security patches or configurations that address specific security concerns.

Steps to Customize and Build Kernels

- Obtain the Kernel Source Code:

- Download Source Code: Obtain the kernel source code from official repositories or distribution sources. For Linux, the source code can be downloaded from the Kernel.org website or through your Linux distribution’s repositories.

- Verify Integrity: Ensure the integrity of the downloaded source code by verifying checksums or signatures provided by the source.
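
The integrity check can be sketched as a small shell function. This is a minimal sketch that assumes the `sha256sum` utility is installed; the function name `verify_sha256` and the file name in the usage comment are hypothetical, and the expected digest has to come from the checksum list published by your source.

```shell
# Sketch: compare a file's SHA-256 digest with an expected value.
# Assumes the coreutils `sha256sum` tool is available.
verify_sha256() {
    file=$1        # path to the downloaded archive
    expected=$2    # digest published by the source
    # sha256sum prints "<digest>  <filename>"; keep only the digest.
    actual=$(sha256sum "$file" | cut -d ' ' -f 1)
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file" >&2
        return 1
    fi
}

# Usage (hypothetical file name; take the digest from the published list):
#   verify_sha256 linux.tar.xz "<expected digest>"
```

A checksum mainly detects corruption. Verifying a cryptographic signature as well, where the source provides one, also guards against tampering.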

- Set Up the Development Environment:

- Install Development Tools: Ensure that you have the necessary development tools and libraries installed. This typically includes compilers (e.g., GCC), build tools (e.g., Make), and other dependencies required for kernel compilation.

- Prepare the Build System: Configure your build environment, including setting up appropriate directories and environment variables.
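
Before configuring anything, it can help to confirm that the required tools are on the `PATH`. The following is a minimal sketch; the function name `check_tools` is hypothetical, and the tool list in the usage comment is an assumption, since the exact requirements depend on the kernel version and the options you enable.

```shell
# Sketch: report which of the named tools can be found on the PATH.
check_tools() {
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found: $tool"
        else
            echo "missing: $tool" >&2
            missing=1
        fi
    done
    # Non-zero exit status if at least one tool was not found.
    return "$missing"
}

# Usage (example list only; consult your kernel's documentation):
#   check_tools gcc make flex bison bc
```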

- Configure the Kernel:

- Kernel Configuration Tool: Use a kernel configuration tool to set kernel options and features. Common tools include `make menuconfig`, `make xconfig`, and `make nconfig`. `make menuconfig` and `make nconfig` provide text-based menus in the terminal, while `make xconfig` provides a graphical interface.

- Select Options: Choose the features, drivers, and modules you want to include or exclude in your custom kernel. Configuration options can range from hardware support to file systems and network protocols.

- Save Configuration: Save your configuration to a file (typically `.config`) to ensure that your choices are preserved for the build process.
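
For Linux, the saved `.config` is a plain text file: an option built into the kernel appears as `CONFIG_NAME=y`, one built as a module as `CONFIG_NAME=m`, and a disabled one as the comment `# CONFIG_NAME is not set`. The sketch below shows one way to check an option after saving; the function name `config_state` is hypothetical.

```shell
# Sketch: print the state of one option in a Linux kernel .config file.
config_state() {
    name=$1                 # option name without the CONFIG_ prefix
    file=${2:-.config}      # configuration file to inspect
    # An option that is set appears as CONFIG_<name>=<value>.
    if line=$(grep "^CONFIG_${name}=" "$file"); then
        echo "${line#*=}"   # print the value, e.g. y or m
    else
        echo "n"            # commented out or absent
    fi
}

# Usage, from the top of the source tree after saving the configuration:
#   config_state EXT4_FS
```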

- Modify the Kernel Source Code (if needed):

- Edit Source Code: If you need to make custom modifications, such as adding new features or fixing bugs, edit the kernel source code as required. Ensure that your changes are well-documented and tested.

- Apply Patches: Apply any patches or updates that are relevant to your customization. This might include security patches, bug fixes, or new feature implementations.
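
Applying a patch can be sketched as follows. This assumes GNU `patch` (for the `--dry-run` and `--forward` options) and a patch whose file paths carry one leading directory component, which is why `-p1` is used; the function name `apply_patch` is hypothetical. Run it from the top of the source tree.

```shell
# Sketch: apply a patch only if it applies cleanly.
apply_patch() {
    patch_file=$1
    # Dry run first so that a patch that fails leaves the tree untouched.
    # --forward skips patches that look reversed or already applied
    # instead of asking interactively.
    if patch -p1 --forward --dry-run < "$patch_file" >/dev/null; then
        patch -p1 --forward < "$patch_file"
    else
        echo "patch does not apply cleanly: $patch_file" >&2
        return 1
    fi
}

# Usage (hypothetical file name):
#   apply_patch ../my-fix.patch
```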

- Build the Kernel:

- Compile the Kernel: Use the `make` command to compile the kernel. This process compiles the kernel source code into a binary image that the bootloader can load at startup.

- Build Modules: Features configured as loadable modules are compiled into separate module files. In current Linux kernels, a plain `make` builds the modules along with the kernel image, while `make modules` builds only the modules.

- Verify Build: Check the build process for errors or warnings. Review the build logs to ensure that the kernel and modules have been successfully compiled.
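
The build and verification steps can be combined by capturing the build output in a log file. This is a minimal sketch; the function name `build_with_log` and the default log name `build.log` are hypothetical, and `nproc` is assumed to be available (the sketch falls back to a single job otherwise).

```shell
# Sketch: run a parallel build and keep the full output in a log file.
build_with_log() {
    log=${1:-build.log}
    # Use one job per available CPU; fall back to 1 if nproc is missing.
    njobs=$(nproc 2>/dev/null || echo 1)
    if make -j"$njobs" > "$log" 2>&1; then
        echo "build succeeded; log in $log"
    else
        echo "build failed; last lines of $log:" >&2
        tail -n 20 "$log" >&2
        return 1
    fi
}

# Usage, from the top of a configured source tree:
#   build_with_log
#   grep -i warning build.log
```

Redirecting to a file rather than piping through another command keeps the exit status of `make` intact, so a failed build is reported as a failure.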

- Install the Kernel:

- Install Kernel Binary: Copy the compiled kernel image (usually installed under a name beginning with `vmlinuz`) to the appropriate directory (e.g., `/boot`). This directory typically contains the kernel and associated files required for booting.

- Install Modules: Install any kernel modules to the appropriate directory (e.g., `/lib/modules/<kernel release>`, where the release string is that of the newly built kernel). Note that `$(uname -r)` expands to the release of the currently running kernel, so it names the correct directory only after the new kernel has been booted.

- Update Bootloader: Update your bootloader configuration (e.g., GRUB) to include the new kernel. Ensure that the bootloader can recognize and boot into the custom kernel.
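
The manual copy of the kernel image can be sketched as below. The function name `install_image` is hypothetical, and the `vmlinuz-<release>` naming is a common convention rather than a requirement. For Linux, the source tree also provides the `make modules_install` and `make install` targets, which are usually run as root and handle these steps in a distribution-dependent way.

```shell
# Sketch: copy a built kernel image into the boot directory.
install_image() {
    image=$1                 # built image, e.g. arch/x86/boot/bzImage on x86
    release=$2               # release string, e.g. from `make -s kernelrelease`
    boot_dir=${3:-/boot}     # destination directory
    target="$boot_dir/vmlinuz-$release"
    cp "$image" "$target" && echo "installed $target"
}

# Usage, from the top of the built source tree (usually as root):
#   install_image arch/x86/boot/bzImage "$(make -s kernelrelease)"
```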

- Test the Custom Kernel:

- Reboot the System: Reboot your system to load the new custom kernel. Monitor the boot process for any issues or errors.

- Verify Functionality: Test system functionality, including hardware support, performance, and stability. Ensure that all desired features and configurations are working as expected.

- Troubleshoot: Address any issues or problems encountered during testing. Use kernel logs and debugging tools to identify and resolve issues.
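
A first check after rebooting is whether the system is actually running the new kernel rather than a fallback entry. The sketch below compares the running release with the one you expect; the function name `check_running_kernel` and the release string in the usage comment are hypothetical.

```shell
# Sketch: confirm that the running kernel matches the expected release.
check_running_kernel() {
    expected=$1
    running=$(uname -r)      # release string of the running kernel
    if [ "$running" = "$expected" ]; then
        echo "running expected kernel: $running"
    else
        echo "running $running, expected $expected" >&2
        return 1
    fi
}

# Usage (hypothetical release string):
#   check_running_kernel "6.1.0-custom"
```

Kernel messages from the boot can then be reviewed with `dmesg`, which may require root privileges depending on system settings.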

- Maintain and Update:

- Monitor Updates: Keep track of updates and patches for the kernel and apply them as needed. Regular maintenance ensures that your custom kernel remains secure and up-to-date.

- Document Changes: Document any customizations and modifications made to the kernel. This documentation helps in maintaining the kernel and troubleshooting issues.
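
One lightweight way to document configuration changes is to keep a copy of each `.config` you build and list the option lines that differ between two copies. This is a minimal sketch using standard `diff` and `grep`; the function name `config_changes` and the file names in the usage comment are hypothetical.

```shell
# Sketch: list kernel options that differ between two saved config files.
config_changes() {
    old=$1
    new=$2
    # Keep only added or removed option lines, including the
    # "# CONFIG_X is not set" form; the ---/+++ headers do not match.
    # diff and grep exit non-zero for "differences" / "no match", which
    # is not an error here, hence the trailing `|| true`.
    diff -u "$old" "$new" | grep -E '^[+-](# )?CONFIG_' || true
}

# Usage (hypothetical file names):
#   config_changes config-previous .config > config-changes.txt
```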

Challenges in Kernel Customization

- Complexity: Kernel development requires a deep understanding of system architecture and low-level programming. Customizing and building kernels can be complex and error-prone.

- Compatibility: Ensuring compatibility with hardware and software components requires thorough testing and validation.

- Security: Modifying the kernel introduces potential security risks. It is essential to apply best practices for security and conduct rigorous testing.

Conclusion

Customizing and building kernels offer powerful capabilities for optimizing and tailoring operating systems to specific needs. By understanding the kernel’s role, following structured steps for customization, and addressing potential challenges, developers can create robust and efficient kernels that enhance system performance, support unique hardware, and incorporate new features. Mastering these techniques opens opportunities for advanced system programming and innovation in operating system development.